The Manus Moment and the Future of AI Agents

In early 2025, Manus AI burst onto the scene and rapidly became a major player in the AI agent space. Unlike tech giants that were busy refining foundational models in the lab, Manus focused on delivering a user-facing agent. It positioned itself as a general workflow agent adept at tool use – something the big players hadn’t yet packaged for end-users. This clever positioning filled a market gap: while OpenAI, Google, and others honed their next-gen models, Manus offered an autonomous assistant that could execute tasks for users here and now. By orchestrating existing large language models through prompts and tool calls, Manus effectively became an "application layer" innovation, gaining a hype-driven early-mover advantage.

A Well-Designed Agent for Immediate Utility

Manus’s appeal lies in how it bridges human intent and tangible outcomes. Instead of just chitchatting, it aims to get real work done. At launch, it demonstrated practical skills like travel planning, financial analysis, building presentations, and comparing insurance policies autonomously.

It achieves this by integrating with external tools – browsing the web, controlling a code editor, and more – so it can act on user requests, not just reply with text. This tool-centric design meant Manus could do things traditional chatbots couldn’t. This was a refreshing contrast for users at a time when most “AI” products were either pure chatbots or behind-the-scenes model upgrades. By focusing on an intuitive workflow and visible results, Manus captured the imagination of tech enthusiasts and early adopters looking for AI that acts, not just talks.

The result was immediate traction – Manus’s demo video surpassed 1M views within 20 hours of launch, and its Discord community swelled to over 138,000 members in just days. More than 1M users are now on the waitlist. The frenzy even led to invite codes being resold for thousands of dollars, underscoring the early attention and user adoption it garnered.

The Limitations of Manus’s Prompt-Chained Workflows

While Manus’s approach yielded short-term success, its prompt-based workflow design has fundamental limitations when viewed as a general AI agent. Manus (like other “AutoGPT-era” agents) relies on pre-defined sequences of prompts and fixed tool paths. This means it doesn’t truly think on the fly – it follows a script. In complex or prolonged tasks, such rigidity shows cracks. Early testers observed familiar failure modes from the AutoGPT days:

Lack of deep planning: The agent often lacks true strategic planning and can get stuck mid-task, unable to figure out what to do next.

Context limitations: It struggles with maintaining long-term context, frequently losing coherence on tasks that run beyond a few minutes.

Error compounding: Over multi-step processes, small errors accumulate. Without adaptive reasoning, Manus can veer off course until the task fails

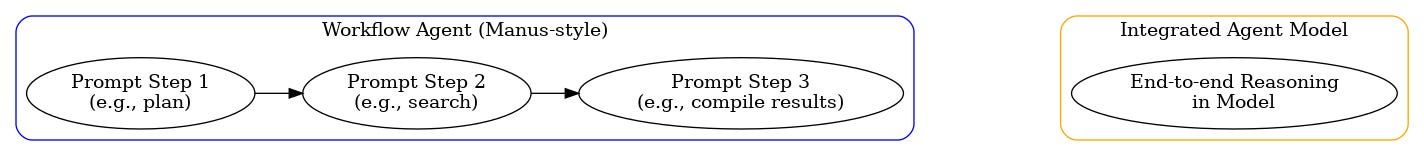

In short, Manus’s “pre-arranged prompts and tool paths” approach may work for straightforward tasks, but it hits a wall with more complex problems. It’s a bit like a recipe: great if the situation matches the script, but easily thrown off by unexpected ingredients. For an agent marketed as “fully autonomous,” these brittle failure modes reveal that Manus is still more of a clever workflow engine than a generally intelligent solver.
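The error-compounding point can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that every step in a fixed chain succeeds independently with the same probability. Neither that assumption nor the 95% figure comes from Manus or its testers; the function name is likewise hypothetical.

```python
def task_success_probability(step_success: float, num_steps: int) -> float:
    """Chance that every step in a fixed chain succeeds.

    Assumes steps succeed independently with the same probability,
    a simplification chosen only for illustration.
    """
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_success ** num_steps


if __name__ == "__main__":
    # Hypothetical per-step reliability of 95%.
    for steps in (1, 5, 10, 20, 50):
        p = task_success_probability(0.95, steps)
        print(f"{steps:>2} steps: {p:.1%} chance of finishing without an error")
```

Under these assumptions, a chain that is 95% reliable per step finishes cleanly only about 60% of the time at 10 steps and about 36% of the time at 20, which is why a script with no way to notice and repair its own mistakes degrades quickly on long tasks.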

Native Reasoning: The Path to True General Agents

The next generation of AI agents is emerging from a very different approach: building the planning and reasoning capabilities inside the model itself. Instead of depending on external prompt chains to drive logic, the model learns to handle tasks through its own internal thought process. This means training AI systems that can autonomously search, plan, and act without a human-scripted game plan. Researchers expect this to come from combining reinforcement learning with advanced reasoning during model training. OpenAI, for example, has said that Deep Research is powered by a model trained specifically for the job, with tool use as part of that training, rather than by its latest GPT wrapped in prompts. In other words, the model itself learns to perform multi-step tasks that Manus would have to break into a scripted workflow. This kind of agent-native model can flexibly adapt its “workflow” on the fly because the workflow is not pre-written – it’s figured out in real time by the agent’s own reasoning.

This shift to model-embedded reasoning has big implications. Tool use and planning become part of the model’s capabilities, not something managed by an external coordinator. Such models also carry their “memory” and strategy internally, potentially remembering long-term goals or past mistakes in a more robust way than today’s context-window-limited systems. In the long run, if the model can intelligently handle an entire task on its own, the myriad wrappers and orchestrators (the so-called “application layer”) may become obsolete. In that future, AI models themselves are the product, delivering value directly through their native intelligence, rather than via heavily engineered workflows.

Efficiency Matters: Prompt Chains vs. Integrated Reasoning

Agent-based AI can consume roughly 1,000 times more tokens than a chatbot, because a single agent task involves many steps. At that ratio, the token consumption of an AI agent product with 1M users is equivalent to that of a chatbot product with 1B users. This points to another pragmatic reason the industry is eyeing a move away from prompt-chained agents like Manus: efficiency, especially token efficiency. Workflow agents tend to be even hungrier for tokens, since a multi-step task might require numerous back-and-forth LLM calls, each carrying overhead in the form of repeated instructions or state. Best practices for those using such agents have included advice like “batch multiple queries into a single prompt to reduce overhead from repeated input tokens” – essentially a workaround for the inefficiency of spreading a chain of reasoning across many calls.
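The batching advice comes down to simple arithmetic on input tokens. The sketch below uses made-up sizes and hypothetical function names, counts only input tokens, and ignores output tokens and any provider-side prompt caching, so it is an illustration rather than a cost model. The 1,000x ratio in the last function is the figure quoted in this article, not a measured value.

```python
def separate_calls_input_tokens(instruction_tokens: int, query_tokens: list[int]) -> int:
    """Each query goes out in its own call, so the instructions are resent every time."""
    return sum(instruction_tokens + q for q in query_tokens)


def batched_call_input_tokens(instruction_tokens: int, query_tokens: list[int]) -> int:
    """All queries share one call, so the instructions are sent once."""
    return instruction_tokens + sum(query_tokens)


def chatbot_equivalent_users(agent_users: int, tokens_ratio: int = 1_000) -> int:
    """Chatbot user count with the same total token consumption,
    given a per-user agent-to-chatbot token ratio."""
    return agent_users * tokens_ratio


if __name__ == "__main__":
    instructions = 800          # hypothetical: system prompt plus tool descriptions
    queries = [50, 60, 40, 70]  # hypothetical query sizes in tokens

    print("separate calls:", separate_calls_input_tokens(instructions, queries))
    print("one batched call:", batched_call_input_tokens(instructions, queries))
    print("chatbot-equivalent users:", chatbot_equivalent_users(1_000_000))
```

With these illustrative numbers the four separate calls send 3,420 input tokens while the batched call sends 1,020; almost all of the difference is the instruction block being repeated.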

By contrast, an integrated agentic model can handle a multi-step reasoning task in a more fluid, contiguous way. If a single model invocation can internally perform, say, five reasoning hops and tool interactions, it may use far fewer tokens than five separate API calls would. There’s less duplication of prompt context and a more continuous flow of thought. Moreover, as model context lengths grow (and with architectures that allow persistent memory), an integrated agent can work through lengthy tasks without resetting its memory every few minutes. This token efficiency and coherence give deep reasoning agents a clear long-term edge. In sum, the architecture of these next-gen agents isn’t just a technical elegance; it’s likely an economic advantage as well.
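A rough way to see the difference is to count tokens for a five-hop task under both designs. The sketch below assumes that a chained agent resends its instructions plus the full accumulated history on every call, while an integrated model reads the instructions once and generates each hop once within a single context. All sizes and function names are hypothetical, and real systems (prompt caching, summarized state, tool outputs of varying length) will behave differently.

```python
def chained_total_tokens(instruction_tokens: int, hop_tokens: int, hops: int) -> int:
    """Input plus output tokens when every hop is a separate call that
    resends the instructions and everything produced by earlier hops."""
    total = 0
    history = 0
    for _ in range(hops):
        total += instruction_tokens + history  # input for this call
        total += hop_tokens                    # output of this call
        history += hop_tokens                  # output becomes input for the next call
    return total


def integrated_total_tokens(instruction_tokens: int, hop_tokens: int, hops: int) -> int:
    """Input plus output tokens when one invocation performs all hops:
    instructions are read once and each hop is generated once."""
    return instruction_tokens + hop_tokens * hops


if __name__ == "__main__":
    # Hypothetical sizes: 1,000 tokens of instructions, 300 tokens per hop.
    chained = chained_total_tokens(1_000, 300, 5)
    integrated = integrated_total_tokens(1_000, 300, 5)
    print(f"chained: {chained} tokens, integrated: {integrated} tokens")
```

In this simplified model the chained design uses 9,500 tokens against 2,500 for the single invocation, and the gap widens with more hops because the resent history grows with every call.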

The Long-Term Outlook: Beyond Manus

Manus deserves credit for its vision and timing. It validated a hunger in the market for AI that feels useful out-of-the-box, inspiring many to imagine what’s possible. By cleverly sidestepping the need for novel model training, Manus showed that with existing LLMs plus a smart tool-oriented prompt design, you can deliver a compelling agent experience. This won it early users, media buzz, and a place in the AI zeitgeist of 2025.

However, Manus’s legacy might be that of a stepping stone rather than the end-game. Its limitations underscore where the real solution lies: in more deeply intelligent models. The future general AI agents that captivate and serve us will think their way through tasks with the competence that comes only from training the AI to reason, not just to respond. We’re already seeing the first glimmers of these advanced agents in research labs and select beta releases – systems that can autonomously browse, code, plan, and execute with minimal human hand-holding. As those evolve with deeper reasoning and adaptive tool use, the bar will rise – not just for completing tasks, but for navigating ambiguity, learning from failure, and collaborating like a thinking partner. The real question isn’t whether an agent can follow or generate a “recipe,” but whether it can understand why a task matters, when to change course, and how to self-improve over time.